In 1950, mathematicians Merrill Flood and Melvin Dresher developed a model that Albert W. Tucker later formalized and popularized. That model is now known as the Prisoner’s Dilemma.

What is it? Imagine two prisoners who are caught, held separately, and each offered a deal:

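1. Betray the other and go free, as long as the other stays silent (if both betray, both get a moderate sentence).

2. Stay silent (cooperate with the other prisoner) and get a light sentence if the other also stays silent, or a harsher one if the other betrays.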

What makes the decision harder? Neither prisoner knows what the other will choose.

Their model shows something surprising: if both cooperate (stay silent), each gets a lighter sentence than if both betray. But each prisoner reasons that betraying is the safer choice whatever the other does, and the fear that “the other might betray me” leads both to betray, which leaves both worse off. Game theory research has studied and supported this logic for decades.
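To make this reasoning concrete, here is a minimal Python sketch. The sentence lengths are hypothetical, textbook-style numbers chosen for illustration (1 year each if both stay silent, 0 years for a betrayer and 3 for the silent one, 2 years each if both betray); they are assumptions, not values from Flood and Dresher’s original work. The sketch checks each prisoner’s best response and compares the combined outcomes.

```python
# Hypothetical payoffs for illustration only: years in prison (lower is better).
SILENT, BETRAY = "silent", "betray"

# YEARS[(my_choice, other_choice)] -> my sentence in years
YEARS = {
    (SILENT, SILENT): 1,
    (SILENT, BETRAY): 3,
    (BETRAY, SILENT): 0,
    (BETRAY, BETRAY): 2,
}


def best_response(other_choice: str) -> str:
    """Return the choice that minimizes my own sentence, given the other's choice."""
    return min((SILENT, BETRAY), key=lambda mine: YEARS[(mine, other_choice)])


def total_years(a: str, b: str) -> int:
    """Combined sentence for both prisoners when they choose a and b."""
    return YEARS[(a, b)] + YEARS[(b, a)]


if __name__ == "__main__":
    # Whatever the other prisoner does, betraying gives me a shorter sentence...
    for other in (SILENT, BETRAY):
        print(f"If the other chooses {other}, my best response is: {best_response(other)}")
    # ...yet mutual betrayal is worse for both than mutual silence.
    print("Both silent:", total_years(SILENT, SILENT), "years combined")
    print("Both betray:", total_years(BETRAY, BETRAY), "years combined")
```

With these assumed numbers, betraying is the best response in both cases, so both prisoners betray and serve 4 years combined, while mutual silence would have cost only 2.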

The core idea applies to business competition, international politics, relationships, and any scenario where mutual trust, even without full information, leads to better results than selfish action.

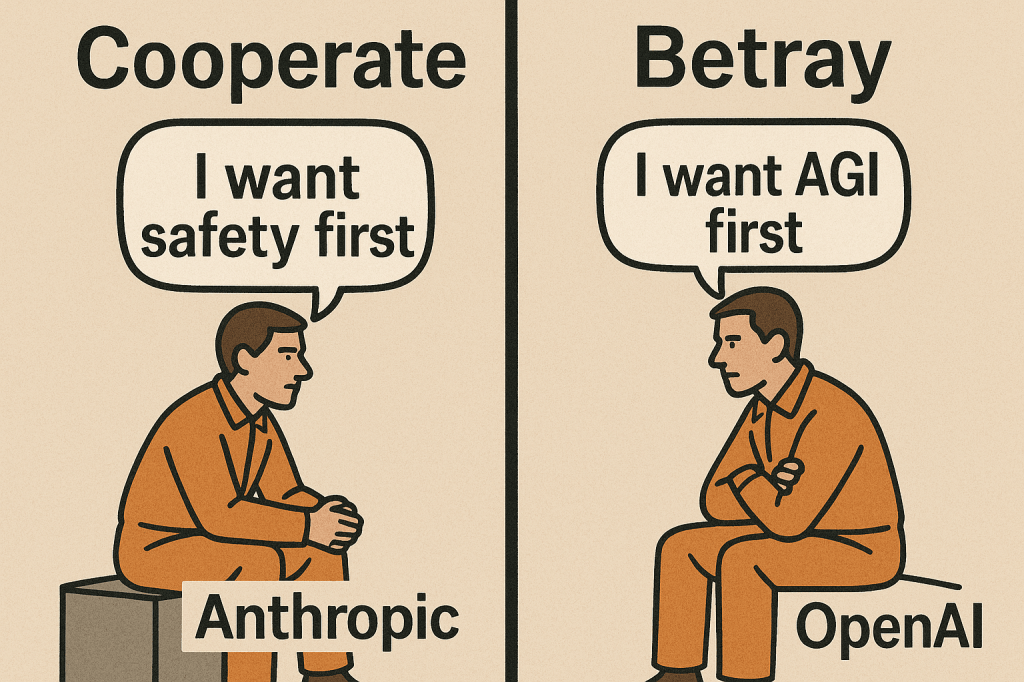

Now, let’s apply this to today’s AI race between companies like OpenAI, Anthropic, and xAI (Grok).

All of them are racing toward AGI (Artificial General Intelligence), whose true meaning is a machine better than all humans combined.

In the AI race, if these companies don’t cooperate with each other, don’t slow down for ethical checks, and prioritize speed over safety, we may end up with a harmful AGI: a superintelligent machine that is not aligned with human values, not safe for us, and hard to control.

The Prisoner’s Dilemma reminds us that the fear of betrayal, or of a rival reaching AGI first, leads to harsher outcomes for all.

What’s changing in tech is that we’re entering an era of AI creativity, innovation, and power. What’s not changing is human accountability and responsibility.

Let’s be more responsible in how we build AI tools and agents. Let’s cooperate by prioritizing ethics, privacy, safety, and control.

Let’s do this for humanity.
